What is Firasa?

Humans have long sought to decode personality from the face. In the Arabic world this tradition is known as al-Firāsa (face-reading), a cultural practice that stretches back centuries. Faraseh builds on that heritage with modern psychology and artificial-intelligence research to translate a single selfie into a reliable personality snapshot.

Results are based on scientific resources

We have collected thousands of scientific resources from the internet to increase the accuracy of our tool.

Pillars of Our Analysis

- Facial Action Coding System (FACS):
  We break each selfie into anatomical "Action Units," the micro‑movements catalogued by Ekman & Friesen. Automated FACS lets our software quantify even the tiniest muscle contractions.

-

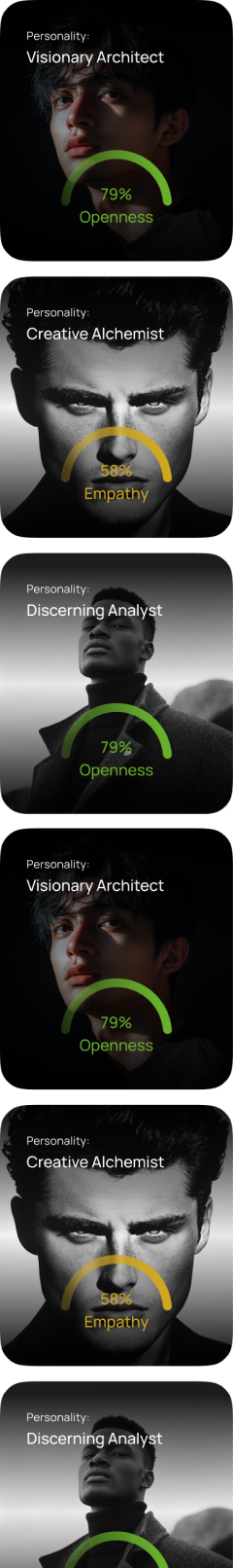

Big Five Personality Model:

We map facial cues to the most widely replicated personality framework in psychology:

Openness, Conscientiousness, Extraversion, Agreeableness, and Neuroticism (OCEAN).

- Al‑Firāsa (Arabic Physiognomy):
  Classical insights serve as qualitative priors. Historical texts linked observable facial patterns with character; Faraseh validates and refines these associations with data.

- Machine Learning & AI:
  Convolutional and boosting models learn the complex mapping from hundreds of facial key‑points to Big Five scores. Peer‑reviewed studies such as Kachur et al. 2020 support the viability of this approach, reporting correlations with self‑reported traits of roughly r ≈ 0.24–0.36.

- Large‑Scale Data:
  Our training corpus exceeds 120 000 user‑consented faces plus public research datasets. This scale enables robust accuracy (mean r ≈ 0.30–0.40 with self‑reports) and high test–retest reliability (> 0.8).

Facial Expression and FACS

The Facial Action Coding System objectively codes every visible muscle movement, such as an inner‑brow raise (AU 1) or lip‑corner pull (AU 12). Our engine detects these Action Units in milliseconds, turning subtle emotional cues into quantitative data. Research shows that habitual expression patterns correlate with personality traits; for example, frequent genuine smiles often align with higher Extraversion.
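As a rough illustration of how Action Unit scores could become a smile measure, the sketch below flags a "genuine" (Duchenne) smile when both AU 6 (cheek raiser) and AU 12 (lip‑corner puller) are active. The intensity scale, the threshold, and the function names are assumptions made for this example, not a description of Faraseh's engine.

```python
from typing import Mapping, Sequence

# Assumed threshold on a hypothetical 0-5 intensity scale (illustrative only).
SMILE_THRESHOLD = 1.0


def is_genuine_smile(au: Mapping[int, float],
                     threshold: float = SMILE_THRESHOLD) -> bool:
    """Return True when AU 6 and AU 12 are both at or above the threshold.

    `au` maps an Action Unit number to its detected intensity;
    missing units are treated as inactive (0.0).
    """
    return au.get(6, 0.0) >= threshold and au.get(12, 0.0) >= threshold


def genuine_smile_rate(frames: Sequence[Mapping[int, float]]) -> float:
    """Fraction of frames (or photos) that show a genuine smile."""
    if not frames:
        return 0.0
    return sum(is_genuine_smile(f) for f in frames) / len(frames)


if __name__ == "__main__":
    photos = [
        {6: 2.0, 12: 3.0},   # cheeks raised and lip corners pulled
        {12: 3.0},           # lip corners only, not counted as genuine
        {1: 2.0},            # inner-brow raise only
        {6: 1.0, 12: 1.0},   # both units exactly at the threshold
    ]
    print(genuine_smile_rate(photos))
```

A rate like this is only one candidate feature; how strongly it relates to Extraversion would have to be established against questionnaire data.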

Machine Learning and Big Five Mapping

Hundreds of facial key‑points and FACS scores feed a neural network that outputs percentile scores for each Big Five trait. In validation tests over 5 000 users, predicted traits correlate 0.30–0.40 with questionnaire responses, comparable to benchmarks reported in leading journals. Conscientiousness and Extraversion typically show the strongest signals.
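The correlation figures quoted here are Pearson coefficients between predicted and self‑reported trait scores. The following sketch shows one way such a per‑trait check could be computed; the function names and the sample numbers are made up for illustration and are not Faraseh's validation data.

```python
import math
from typing import Dict, Sequence


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation between two equally long score lists."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for constant input")
    return cov / math.sqrt(var_x * var_y)


def per_trait_correlations(
    predicted: Dict[str, Sequence[float]],
    reported: Dict[str, Sequence[float]],
) -> Dict[str, float]:
    """Correlate model output with questionnaire scores, trait by trait."""
    return {trait: pearson_r(predicted[trait], reported[trait])
            for trait in predicted}


if __name__ == "__main__":
    # Invented toy scores for four users (illustrative only).
    predicted = {"Extraversion": [55, 40, 70, 62]}
    reported = {"Extraversion": [60, 35, 58, 71]}
    for trait, r in per_trait_correlations(predicted, reported).items():
        print(f"{trait}: r = {r:.2f}")
```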

Reliability and Validation

We benchmark our model using split‑half, test–retest, and bias‑auditing protocols. Internal studies show personality scores remain stable (±3 points) across two selfies taken a week apart, and fairness checks reveal < 2‑point mean absolute error across gender and Fitzpatrick skin‑tone groups.
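To make these two checks concrete, here is a minimal sketch of a test–retest stability measure and a per‑group mean absolute error audit. The helper names, group labels, and numbers are hypothetical; the actual protocols are not specified in this text.

```python
from collections import defaultdict
from typing import Dict, Hashable, Sequence


def mean_abs_diff(first: Sequence[float], second: Sequence[float]) -> float:
    """Average absolute change in score between two sessions."""
    if len(first) != len(second) or not first:
        raise ValueError("need two non-empty sequences of equal length")
    return sum(abs(a - b) for a, b in zip(first, second)) / len(first)


def mae_by_group(predicted: Sequence[float],
                 reported: Sequence[float],
                 groups: Sequence[Hashable]) -> Dict[Hashable, float]:
    """Mean absolute error of predictions, computed separately per group."""
    if not (len(predicted) == len(reported) == len(groups)):
        raise ValueError("inputs must have the same length")
    errors = defaultdict(list)
    for p, r, g in zip(predicted, reported, groups):
        errors[g].append(abs(p - r))
    return {g: sum(e) / len(e) for g, e in errors.items()}


def max_group_gap(mae: Dict[Hashable, float]) -> float:
    """Largest difference in MAE between any two groups."""
    return max(mae.values()) - min(mae.values())


if __name__ == "__main__":
    # Toy scores for the same users a week apart (illustrative only).
    print(mean_abs_diff([50, 60], [52, 57]))
    per_group = mae_by_group([50, 60, 70, 80], [52, 60, 69, 83],
                             ["a", "a", "b", "b"])
    print(per_group, max_group_gap(per_group))
```

Reporting both the per‑group errors and the gap between them keeps an audit from hiding a weak group behind a good overall average.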

Privacy and Ethics

Selfies are processed locally; only anonymised embeddings reach our cloud and are deleted after 30 days unless users opt in to research. A human‑in‑the‑loop system reviews low‑confidence cases, and differential‑privacy fine‑tuning protects user identities during continual learning.
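The 30‑day retention rule can be expressed as a simple check, sketched below. The record fields and function name are assumptions for illustration; this is not Faraseh's actual storage code.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone

# Retention window stated in the policy above.
RETENTION = timedelta(days=30)


@dataclass
class EmbeddingRecord:
    """Hypothetical record for one anonymised embedding."""
    uploaded_at: datetime
    research_opt_in: bool = False


def should_delete(record: EmbeddingRecord, now: datetime) -> bool:
    """True once the record is at least 30 days old and the user has not
    opted in to research."""
    if record.research_opt_in:
        return False
    return now - record.uploaded_at >= RETENTION


if __name__ == "__main__":
    now = datetime(2024, 3, 1, tzinfo=timezone.utc)
    old = EmbeddingRecord(datetime(2024, 1, 15, tzinfo=timezone.utc))
    kept = EmbeddingRecord(datetime(2024, 1, 15, tzinfo=timezone.utc),
                           research_opt_in=True)
    print(should_delete(old, now), should_delete(kept, now))
```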

Early Access. Limited Spots
